ICM Overview

As of release 2023.3 of InterSystems IRIS, InterSystems Cloud Manager (ICM) is deprecated; it will be removed from future versions.

This document describes the use of InterSystems Cloud Manager (ICM) to deploy InterSystems IRIS® data platform configurations in public and private clouds and on preexisting physical and virtual clusters.

This page explains what InterSystems Cloud Manager (ICM) does, how it works, and how it can help you deploy InterSystems IRIS data platform configurations on cloud, virtual, and physical infrastructure. (For a brief introduction to ICM including a hands-on exploration, see InterSystems IRIS Demo: InterSystems Cloud Manager.)

Benefits of InterSystems Cloud Manager

InterSystems Cloud Manager (ICM) provides you with a simple, intuitive way to provision cloud infrastructure and deploy services on it. ICM is designed to bring you the benefits of infrastructure as code (IaC), immutable infrastructure, and containerized deployment of your InterSystems IRIS-based applications, without requiring you to make major investments in new technology and the attendant training and trial-and-error configuration and management.

ICM makes it easy to provision and deploy the desired InterSystems IRIS configuration on Infrastructure as a Service (IaaS) public cloud platforms, including Google Cloud Platform, Amazon Web Services, Microsoft Azure, and Tencent Cloud. Define what you want in plain text configuration files and use the simple command line interface to direct ICM; ICM does the rest, including provisioning your cloud infrastructure and deploying your InterSystems IRIS-based applications on that infrastructure in Docker containers.

ICM codifies APIs into declarative configuration files that can be shared among team members like code, edited, reviewed, and versioned. By executing the specifications in these files, ICM enables you to safely and predictably create, change, and improve production infrastructure on an ongoing basis.

Using ICM lets you take advantage of the efficiency, agility, and repeatability provided by virtual and cloud computing and containerized software without major development or retooling. The InterSystems IRIS configurations ICM can provision and deploy range from a stand-alone instance, through a distributed cache cluster of application servers connected to a data server, to a sharded cluster of data and compute nodes. ICM can deploy on existing virtual and physical clusters as well as infrastructure it provisions.

Even if you are already using cloud infrastructure, containers, or both, ICM dramatically reduces the time and effort required to provision and deploy your application by automating numerous manual steps based on the information you provide. And the functionality of ICM is easily extended through the use of third-party tools and in-house scripting, increasing automation and further reducing effort.

Each element of the ICM approach provides its own advantages, which combine with each other:

- Configuration file templates allow you to accept default values provided by InterSystems for most settings, customizing only those required to meet your specific needs.

- The command line interface allows you to initiate each phase of the provisioning and deployment process with a single simple command, and to interact with deployed containers in a wide variety of ways.

- IaC brings the ability to quickly provision consistent, repeatable platforms that are easily reproduced, managed, and disposed of.

- IaaS providers enable you to utilize infrastructure in the most efficient manner (for example, if you need a cloud configuration for only a few hours, you pay for only a few hours) while also supporting repeatability and providing all the resources you need to go with your host nodes, such as networking and security, load balancers, and storage volumes.

- Containerized application deployment means seamlessly replaceable application environments on immutable software-provisioned infrastructure, separating code from data and avoiding the risks and costs of updating the infrastructure itself while supporting Continuous Integration/Continuous Deployment (CI/CD) and a DevOps approach.

Containers Support the DevOps Approach

ICM exploits these advantages to bring you the following benefits:

- Automated provisioning and deployment, and command-line management, of large-scale, cloud-based InterSystems IRIS configurations.

- Integration of existing InterSystems IRIS and InterSystems IRIS-based applications into your enterprise’s DevOps toolchain.

- Stability, robustness, and minimization of risk through easy versioning of both the application and the environment it runs in.

- Elastic scaling of deployed InterSystems IRIS configurations through rapid reprovisioning and redeployment.

If you prefer not to work with Docker containers, you can use ICM to provision cloud infrastructure and install noncontainerized InterSystems IRIS instances on that infrastructure, or to install InterSystems IRIS on existing infrastructure. For more information about using ICM’s containerless mode, see Containerless Deployment.

The InterSystems Cloud Manager Application Lifecycle

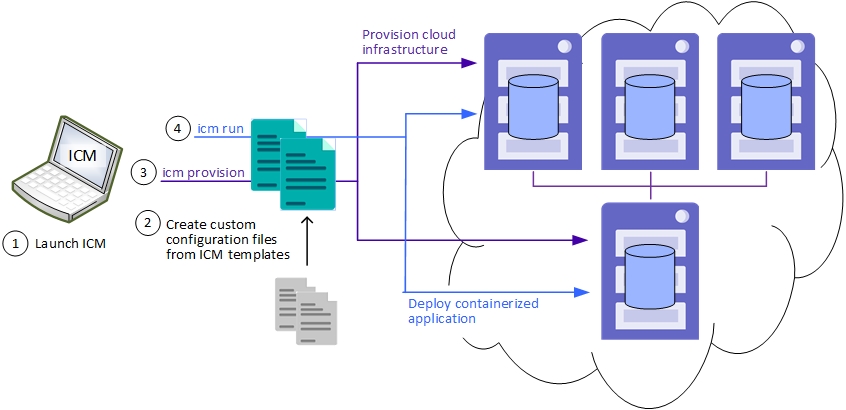

The role of ICM in the application lifecycle, including its two main phases, provision and deploy, is shown in the following illustration:

Define Goals

ICM’s configuration files, as provided, contain almost all of the settings you need to provision and deploy the InterSystems IRIS configuration you want. Simply define your desired configuration in the appropriate file and specify a few details such as credentials (cloud server provider, SSH, TLS), InterSystems IRIS licenses, the types and sizes of the host nodes you want, and so on. (See Define the Deployment for details.)
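As a rough illustration of what "defining the configuration in plain text files" can look like, the following Python sketch builds two JSON documents: one holding deployment-wide defaults and one listing the node types to provision. The field names (`Provider`, `Label`, `Tag`, `Region`, `SSHUser`, `LicenseDir`, `Role`, `Count`) and all values are assumptions chosen for illustration; check them against the ICM reference before using them in real files.

```python
import json

# Hypothetical deployment-wide defaults. Field names and values are
# illustrative assumptions, not a verified ICM schema.
defaults = {
    "Provider": "AWS",
    "Label": "demo",
    "Tag": "test",
    "Region": "us-east-1",
    "SSHUser": "ubuntu",
    "LicenseDir": "/path/to/licenses",  # placeholder path
}

# One entry per node type: here, a sharded cluster of data and compute nodes.
definitions = [
    {"Role": "DATA", "Count": "2"},
    {"Role": "COMPUTE", "Count": "2"},
]


def render(obj):
    """Serialize a configuration object as indented JSON text."""
    return json.dumps(obj, indent=4)


def total_nodes(defs):
    """Total number of host nodes requested across all definitions."""
    return sum(int(d["Count"]) for d in defs)


print(render(defaults))
print(render(definitions))
print("host nodes requested:", total_nodes(definitions))
```

Because the output is ordinary JSON text, it can be reviewed, diffed, and versioned like any other source file.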

In this document, the term host node is used to refer to a virtual host provisioned either in the public cloud of one of the supported cloud service providers or in a private cloud using VMware vSphere.

Provision

ICM supports four main provisioning activities: creating (provisioning), configuring, modifying, and destroying (unprovisioning) host nodes and associated resources in a cloud environment.

ICM carries out provisioning tasks by making calls to HashiCorp’s Terraform. Terraform is an open source tool for building, changing, and versioning infrastructure safely and efficiently, and is compatible with both existing cloud services providers and custom solutions. Configuration files describe the provisioned infrastructure. (See Provision the Infrastructure for details.)

Although all of the tasks could be issued as individual Terraform commands, executing Terraform jobs through ICM has the following advantages over invoking Terraform directly:

| Terraform Executed Directly | Terraform Executed by ICM |
|---|---|
| Executes provisioning tasks only, cannot integrate provisioning with deployment and configuration | Coordinates all phases, including in elastic reprovisioning and redeployment (for example adding nodes to the cluster infrastructure, then deploying and configuring InterSystems IRIS on the nodes to incorporate them into the cluster) |
| Configures each type of node in sequence, leading to long provisioning times | Runs multiple Terraform jobs in parallel to configure all node types simultaneously, for faster provisioning |
| Does not provide programmatic access (has no API) | Provides programmatic access to Terraform |
| Defines the desired infrastructure in the proprietary HashiCorp Configuration Language (HCL) | Defines the desired infrastructure in a generic JSON format |

ICM also carries out some postprovisioning configuration tasks using SSH in the same fashion, running commands in parallel on multiple nodes for faster execution.
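The benefit of running jobs for all node types at once, rather than one type after another, can be sketched in a few lines of Python. This is only a conceptual illustration, not ICM's implementation: `provision` is a hypothetical stand-in that sleeps instead of calling Terraform or SSH, and the node type names are examples.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def provision(node_type):
    """Hypothetical stand-in for one provisioning job; makes no real calls."""
    time.sleep(0.1)  # simulate a slow remote operation
    return f"{node_type}: provisioned"


def provision_sequential(node_types):
    """Run one job per node type, one after another."""
    return [provision(t) for t in node_types]


def provision_parallel(node_types):
    """Run one job per node type concurrently; results keep input order."""
    workers = max(1, len(node_types))
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(provision, node_types))


node_types = ["DATA", "COMPUTE"]

start = time.perf_counter()
sequential = provision_sequential(node_types)
print(f"sequential: {time.perf_counter() - start:.2f}s")

start = time.perf_counter()
parallel = provision_parallel(node_types)
print(f"parallel:   {time.perf_counter() - start:.2f}s")
```

Both approaches produce the same results; with independent jobs, the parallel version's elapsed time is closer to that of the slowest single job than to the sum of all jobs.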

Deploy

ICM deploys InterSystems IRIS images in Docker containers on the host nodes it provisions. These containers are platform-independent and fully portable, do not need to be installed, and are easily tunable. ICM itself is deployed in a Docker container. A containerized application runs natively on the kernel of the host system, while the container provides it with only the elements needed to run it and make it accessible to the required connections, services, and interfaces — a runtime environment, the code, libraries, environment variables, and configuration files.

Deployment tasks are carried out by making calls to Docker. Although all of the tasks could be issued as individual Docker commands, executing Docker commands through ICM has the following advantages over invoking Docker directly:

- ICM runs Docker commands across all machines in parallel threads, reducing the total amount of time to carry out lengthy tasks, such as pulling (downloading) images.

- ICM can orchestrate tasks, such as rolling upgrades, that have application-specific requirements.

- ICM can redeploy services on infrastructure that has been modified since the initial deployment, including upgrading or adding new containers.

To learn how to quickly get started running an InterSystems IRIS container on the command line, see InterSystems IRIS Basics: Running an InterSystems IRIS Container; for detailed information about deploying InterSystems IRIS and InterSystems IRIS-based applications in containers using methods other than ICM, see Running InterSystems IRIS in Containers.

Manage

ICM commands let you interact with and manage your infrastructure and containers in a number of ways. For example, you can run commands on the cloud hosts or within the containers, copy files to the hosts or the containers, upgrade containers, and interact directly with InterSystems IRIS.

For complete information about ICM service deployment and management, see Deploy and Manage Services.

Additional Automated Deployment Methods for InterSystems IRIS

In addition to ICM, InterSystems IRIS data platform provides the following methods for automated deployment.

Automated Deployment Using the InterSystems Kubernetes Operator (IKO)

Kubernetes is an open-source orchestration engine for automating deployment, scaling, and management of containerized workloads and services. You define the containerized services you want to deploy and the policies you want them to be governed by; Kubernetes transparently provides the needed resources in the most efficient way possible, repairs or restores the deployment when it deviates from spec, and scales automatically or on demand. The InterSystems Kubernetes Operator (IKO) extends the Kubernetes API with the IrisCluster custom resource, which can be deployed as an InterSystems IRIS sharded cluster, distributed cache cluster, or standalone instance, all optionally mirrored, on any Kubernetes platform.

The IKO isn’t required to deploy InterSystems IRIS under Kubernetes, but it greatly simplifies the process and adds InterSystems IRIS-specific cluster management capabilities to Kubernetes, enabling tasks like adding nodes to a cluster, which you would otherwise have to do manually by interacting directly with the instances.

For more information on using the IKO, see Using the InterSystems Kubernetes Operator.

Automated Deployment Using Configuration Merge

The configuration merge feature, available on Linux and UNIX® systems, lets you vary the configurations of InterSystems IRIS containers deployed from the same image, or local instances installed from the same kit, by simply applying the desired declarative configuration merge file to each instance in the deployment.

This merge file, which can also be applied when restarting an existing instance, updates an instance’s configuration parameter file (CPF), which contains most of its configuration settings; these settings are read from the CPF at every startup, including the first one after an instance is deployed. When you apply configuration merge during deployment, you in effect replace the default CPF provided with the instance with your own updated version.
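The effect described above, where settings in a merge file override the corresponding settings in the CPF while everything else is left unchanged, can be modeled with a short Python sketch. This is a simplified illustration only: the real merge is performed by InterSystems IRIS itself, and the CPF excerpt, the merge file contents, and the `merge` helper below are assumptions made for the example.

```python
import configparser

# Hypothetical excerpt of a default CPF (illustrative values only).
BASE_CPF = """\
[config]
globals=0,0,0,0,0,0
routines=0

[Startup]
SystemMode=DEVELOPMENT
"""

# Hypothetical merge file: only the settings to be changed are listed.
MERGE_FILE = """\
[config]
globals=0,0,800,0,0,0

[Startup]
SystemMode=TEST
"""


def parse(text):
    """Parse INI-style text, keeping parameter names case-sensitive."""
    parser = configparser.ConfigParser(interpolation=None)
    parser.optionxform = str
    parser.read_string(text)
    return parser


def merge(base_text, merge_text):
    """Return the base settings with every merge-file setting applied on top."""
    merged = parse(base_text)
    updates = parse(merge_text)
    for section in updates.sections():
        if not merged.has_section(section):
            merged.add_section(section)
        for name, value in updates.items(section):
            merged.set(section, name, value)
    return merged


result = merge(BASE_CPF, MERGE_FILE)
for section in result.sections():
    print(f"[{section}]")
    for name, value in result.items(section):
        print(f"{name}={value}")
```

Settings named in the merge file replace their defaults, and settings it does not mention (such as `routines` here) keep their original values.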

Using configuration merge, you can deploy individual instances or groups of instances with exactly the configuration you want. To deploy complex architectures, you can call separate merge files for the different types of instances involved. For example, to deploy a sharded cluster, you would sequentially deploy data node 1, then the remaining data nodes, then (optionally) the compute nodes.

As described in Deploying with Customized InterSystems IRIS Configurations, you can specify a configuration merge file to be applied during the ICM deployment phase using the UserCPF parameter. The IKO, described in the preceding section, also incorporates the configuration merge feature.

For information about using configuration merge in general and to deploy mirrors in particular, see Automating Configuration of InterSystems IRIS with Configuration Merge.